Join Fusion’s SEO team as we round up last month’s major industry updates.

PAGE EXPERIENCE ALGORITHM NOW ROLLING OUT

On 15th June Google announced the rollout of its much-anticipated Page Experience algorithm.

Incorporating the new Core Web Vitals metrics, the algorithm measures a range of factors broadly related to page usability and user experience, including:

- Page speed

- Interactivity

- Visual stability

- Mobile-friendliness

- Safe browsing

- HTTPS usage

- Usage of intrusive interstitials

Sites that score well across the above factors will be considered to offer a good page experience and may be favoured in SERPs as a result. However, according to Google, sites should not expect to see drastic changes as an immediate result of the update.
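For readers who want to see how the three Core Web Vitals in the list above are typically rated, here is a minimal Python sketch. It assumes the thresholds Google published for Largest Contentful Paint (page speed), First Input Delay (interactivity) and Cumulative Layout Shift (visual stability) at the time of the rollout. The function and key names are our own illustration, not part of any Google tool.

```python
# Assumed thresholds per metric: (upper bound for "good", upper bound for
# "needs improvement"). Values follow Google's public Core Web Vitals guidance
# as of mid-2021; the dictionary keys are hypothetical names for this sketch.
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),  # Largest Contentful Paint (page speed)
    "fid_ms": (100, 300),       # First Input Delay (interactivity)
    "cls": (0.1, 0.25),         # Cumulative Layout Shift (visual stability)
}


def rate(metric: str, value: float) -> str:
    """Return 'good', 'needs improvement' or 'poor' for a single metric."""
    good_limit, poor_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(measurements: dict) -> bool:
    """True only if every supplied metric is rated 'good'."""
    return all(rate(name, value) == "good" for name, value in measurements.items())


if __name__ == "__main__":
    # Illustrative measurements for a single page, not real data.
    page = {"lcp_seconds": 2.1, "fid_ms": 150, "cls": 0.05}
    for name, value in page.items():
        print(name, value, rate(name, value))
    print("Passes all three:", passes_core_web_vitals(page))
```

Note that this only covers the three Core Web Vitals. The other signals in the list (mobile-friendliness, safe browsing, HTTPS and interstitials) are assessed separately.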

Although the current update only applies to mobile devices, Google has confirmed that Page Experience will become a ranking factor for desktop in the near future. A timeline for this has not yet been set out, with an announcement expected closer to the time of release.

To find out more about what to expect from the new update and our approach to measuring Page Experience, read our dedicated blog post here.

BROAD CORE ALGORITHM UPDATE RELEASED ON 2nd JUNE

Earlier in June, ahead of the planned Page Experience update, Google rolled out a previously unannounced broad core update.

Referred to as the June 2021 Core Update, the release began rolling out on 2nd June and finished around the 12th. Unusually, Google announced that this would be a two-part update, with the second part following at some point in July. Google’s Danny Sullivan clarified:

“Some of our planned improvements for the June 2021 update aren’t quite ready, so we’re moving ahead with the parts that are, then we will follow with the rest with the July 2021 update. Most sites won’t notice either of these updates, as is typical with any core updates.”

Google has not disclosed exact details of the changes made in the two updates, simply stating that the update is fairly typical and that sites may see a negative, positive, or negligible impact. As has become usual with core updates, Google also maintained that there’s nothing in particular for webmasters to do in response.

… AND A NEW TWO-PART SEARCH SPAM UPDATE

As if the Page Experience and June / July 2021 core updates were not enough, in late June Google also released another update, this time targeting “search spam”.

The update rolled out in two parts: the first release started on the 23rd and completed within a single day, with the second following the next week, on the 28th. Confirming the update on the 23rd, Google stated:

“As part of our regular work to improve results, we’ve released a spam update to our systems. This spam update will conclude today. A second one will follow next week.”

Google has not clarified the exact types of spam targeted in the releases, simply advising webmasters to follow its best practice guidelines for search.

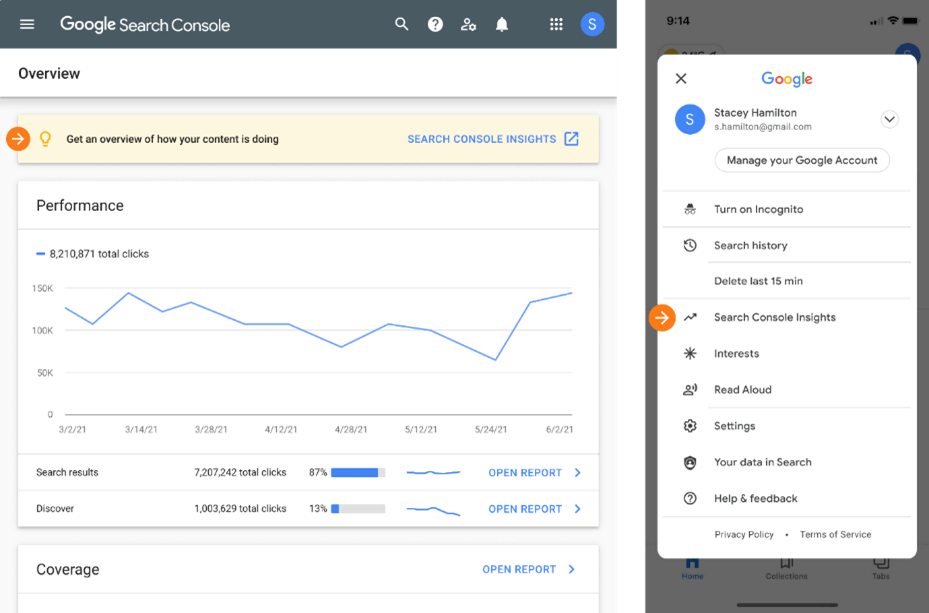

GOOGLE LAUNCHES SEARCH CONSOLE INSIGHTS

Google Search Console received another round of features in June, with the addition of a new “Insights” report.

Insights combines data from Google Search Console and Google Analytics to make it easier to analyse the performance of site content. In Google’s words, Insights aims to help site owners answer the following questions:

- “What are your best performing pieces of content, and which ones are trending?”

- “How do people discover your content across the web?”

- “What do people search for on Google before they visit your content?”

- “Which article refers users to your website and content?”

The new tool began rolling out in mid-June and should now be available to most Google Search Console users. Site owners can access Insights either directly through Search Console or via a new portal on the Google site.

ROBOTS.TXT ON SHOPIFY SITES NOW EDITABLE

Owners of Shopify sites are now able to manually upload and edit robots.txt files. The new feature was announced on Twitter by Shopify CEO Tobi Lütke and, as of 21st June, should be fully rolled out.

Shopify previously applied a default robots.txt file to every site, with no clear workaround for webmasters who needed to edit it. The file can now be changed manually via the robots.txt.liquid theme template, with site owners able to:

- Block certain crawlers

- Disallow (or allow) certain URLs from being crawled

- Manually add extra sitemap URLs

- Add crawl-delay rules for specific crawlers

While Shopify maintains that the default robots.txt “works for most stores”, the new functionality ultimately gives greater control to site owners and is likely to be welcomed by SEOs working with Shopify sites.
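Before publishing a customised file, it can help to check that the rules behave as intended. The sketch below uses Python’s standard `urllib.robotparser` module to test a hypothetical robots.txt covering each of the four options listed above. The crawler names, paths and sitemap URL are invented for illustration and are not Shopify defaults. It checks the rendered robots.txt output rather than the robots.txt.liquid template itself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; every name, path and URL here is an
# illustrative assumption, not taken from Shopify's default file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout
Allow: /collections/

User-agent: ExampleBot
Disallow: /

User-agent: SlowBot
Crawl-delay: 10
Disallow: /search

Sitemap: https://example.com/extra-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    # A crawler with its own block-all group cannot fetch anything.
    print(parser.can_fetch("ExampleBot", "https://example.com/products/hat"))

    # Other crawlers fall back to the "*" group.
    print(parser.can_fetch("Googlebot", "https://example.com/checkout"))
    print(parser.can_fetch("Googlebot", "https://example.com/collections/shoes"))

    # Crawl-delay is reported per crawler (None if no delay is set).
    print(parser.crawl_delay("SlowBot"))

    # Extra sitemap URLs declared in the file (requires Python 3.8+).
    print(parser.site_maps())
```

Bear in mind that Python’s parser applies the first matching rule in a group, whereas some search engines use the most specific match, so treat this as a sanity check rather than a guarantee of how any particular crawler will behave.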

If you found this update useful, check out our latest blog posts for more news, and if you’re interested in finding out more about what we can do for your brand, get in touch with the team today.

by Joshua Carter