Google warns of harsh penalties for repeated guideline violations

Google has warned that sites found repeatedly violating webmaster guidelines, or that have a “clear intention to spam”, could face harsher manual action penalties.

Usually, if a site receives a manual penalty for violating guidelines, it needs to rectify the violation and send a reconsideration request to Google in order for the penalty to be revoked.

However, if a site goes on to violate the guidelines again after a successful reconsideration request, Google’s new blog post states that “further action” will be undertaken.

This “further action” will make any future reconsideration requests more difficult to carry out, less likely to be accepted, and in general reduce the chance of any manual actions being removed.

Summing this up, Google states that “In order to avoid such situations, we recommend that webmasters avoid violating our Webmaster Guidelines [in the first place], let alone [repeat this]”.

HTTPS acts as a “tiebreaker” in search results

In a recent video hangout, Google’s Webmaster Trends Analyst Gary Illyes emphasised again the slight ranking boost given to HTTPS sites, clarifying it as a “tiebreaker”.

In situations where the quality signals for two separate sites are essentially equal, if one site is on HTTP and one is on HTTPS, the HTTPS site will be given a slight boost. This reflects Google’s recent attitude towards HTTPS: whilst Google doesn’t regard encryption as essential, it is strongly recommended.
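The tiebreaker idea can be illustrated with a minimal Python sketch. This is not Google’s algorithm: the `Page` class, the single `quality` score and the rounding used to decide what counts as “essentially equal” are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    quality: float  # hypothetical combined quality score, higher is better


def rank(pages):
    """Order pages by quality; HTTPS only separates pages of equal quality."""
    return sorted(
        pages,
        key=lambda p: (
            -round(p.quality, 2),               # primary: quality (assumed tolerance of 2 d.p.)
            not p.url.startswith("https://"),   # tiebreaker: HTTPS sorts ahead of HTTP
        ),
    )


pages = [
    Page("http://a.example", 0.80),
    Page("https://b.example", 0.80),
    Page("http://c.example", 0.90),
]
for page in rank(pages):
    print(page.url)
```

Here the HTTP page with the higher score still comes first, and HTTPS only decides the order of the two pages whose scores are equal.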

This doesn’t mean that HTTP is viewed as a negative by Google, and Illyes clarified that it’s still “perfectly fine” for a website to not be HTTPS.

However, whilst having a site on HTTPS alone isn’t enough to result in a positive SERP ranking, “if you’re in a competitive niche, then it can give you an edge from Google’s point of view”.

Google hints that structured data could be used as a ranking factor

Although structured data that is relevant to a specific site or niche makes a site’s SERP snippets richer, and in turn could potentially improve CTR, it’s not something Google currently uses as a ranking factor.

However, new comments, alongside the fact that Google now issues penalties for improper schema implementation, suggest that this could change in future. Acknowledging how useful structured markup can be to users, Google’s John Mueller stated that “over time, I think it is something that might go into the rankings”.

Mueller gave a brief example of how this might work, saying that in a case where a person is searching for a car, “we can say oh well, we have these pages that are marked up with structured data for a car, so probably they are pretty useful in that regard”.
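As a rough idea of what “marked up with structured data for a car” can look like, here is a minimal Python sketch that builds a schema.org `Car` object as JSON-LD. The helper name `car_json_ld` and the example values are hypothetical, and the property set is deliberately small; it is not the markup Mueller was referring to, nor a complete implementation.

```python
import json


def car_json_ld(name, brand, model):
    """Return a JSON-LD script tag describing a car (illustrative values only)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Car",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "model": model,
    }
    # JSON-LD is usually embedded in the page inside a script tag of this type.
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


print(car_json_ld("Example Hatchback 1.6", "ExampleMotors", "Hatchback"))
```

Markup like this only describes the page; as noted below, the content itself would still need to be good for any benefit to apply.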

However, it was emphasised that this wouldn’t be used as a sole ranking signal, and that a site would need to have “good content” as well as being technically sound in order to benefit from any potential structured data ranking factors.

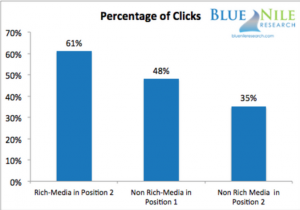

Study finds increased CTR on position 2 results with rich snippets

Market research company Blue Nile Research has suggested in a new study that rich snippets could shift CTR percentage from position 1 to position 2.

The study compared responses to three scenarios: a result in position 1 with no rich snippets, a result in position 2 with rich snippets (such as stars, images, videos etc.), and a result in position 2 with no rich snippets.

A comparison of clicks for each scenario found a 61% click share for the position 2 result with rich snippets, whereas the position 1 result with no rich snippets had only a 48% click share. Meanwhile, the position 2 result with no rich snippets had the lowest click share at 35%.
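To put those figures side by side, the short Python sketch below works out the relative difference between the reported click shares. The percentages are the ones quoted above; the `relative_change` helper and the variable names are just illustrative.

```python
# Click shares reported by the study, in percent.
click_share = {
    "position 1, no rich snippets": 48,
    "position 2, rich snippets": 61,
    "position 2, no rich snippets": 35,
}


def relative_change(new, base):
    """Percentage change of `new` relative to `base`."""
    return (new - base) / base * 100


rich_p2 = click_share["position 2, rich snippets"]
plain_p1 = click_share["position 1, no rich snippets"]
plain_p2 = click_share["position 2, no rich snippets"]

print(round(relative_change(rich_p2, plain_p1), 1))  # 27.1
print(round(relative_change(rich_p2, plain_p2), 1))  # 74.3
```

In other words, the position 2 result with rich snippets had a click share 13 percentage points (about 27%) higher than the plain position 1 result, and 26 points (about 74%) higher than the plain position 2 result.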

The study looked at the search habits of 300 people in a lab environment, and as such doesn’t necessarily give an accurate representation of natural user activity. However, it does suggest that structured markup and rich snippets have a valid part to play when considering how to boost click-through rate.

Google says linking externally has no SEO benefit

Although it’s common knowledge that gaining links from good quality sites can have a positive SEO benefit, the effect of linking out externally hasn’t always been as clear-cut.

It’s often been thought that, whilst not comparable to earning links, linking to external sites could provide a marginal search benefit. Although never explicitly confirmed, this belief has been reinforced by Google: in 2009, Matt Cutts stated that “in the same way that Google trusts sites less when they link to spammy sites or bad neighbourhoods, parts of our system encourage links to good sites”.

However, new comments have suggested that this isn’t the case. When asked “is there a benefit of referencing external useful sites within your content?”, Google’s John Mueller clarified that “It is not something that we would say that there is any SEO advantage of”, but that “if you think this is a link that helps users understand your site better, then maybe that makes sense.”

So, although linking externally appears to have no direct SEO benefit, it should still be considered a valuable part of creating a user-friendly site.

by Joshua Carter