BERT, or Bidirectional Encoder Representations from Transformers, is the new search algorithm update released by Google. BERT is a natural language processing (NLP) model that helps Google understand the intention behind a search query, rather than relying solely on the key terms within the query to work out what the user wants.

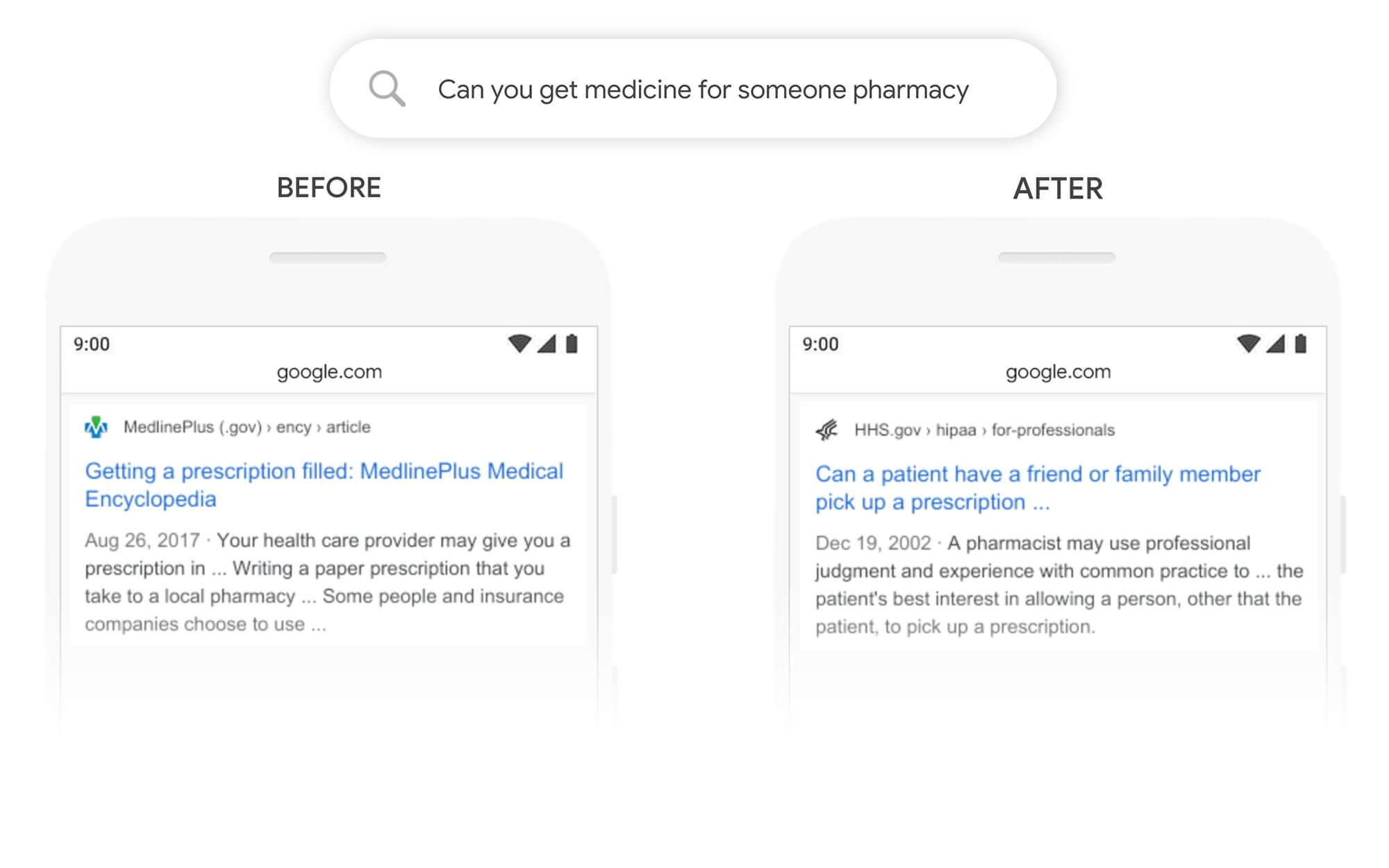

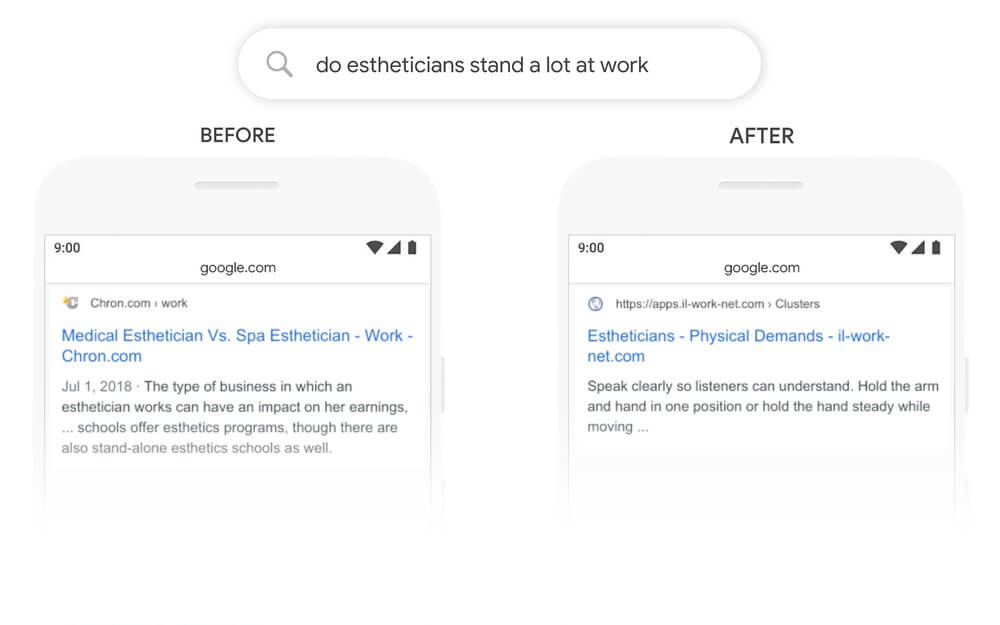

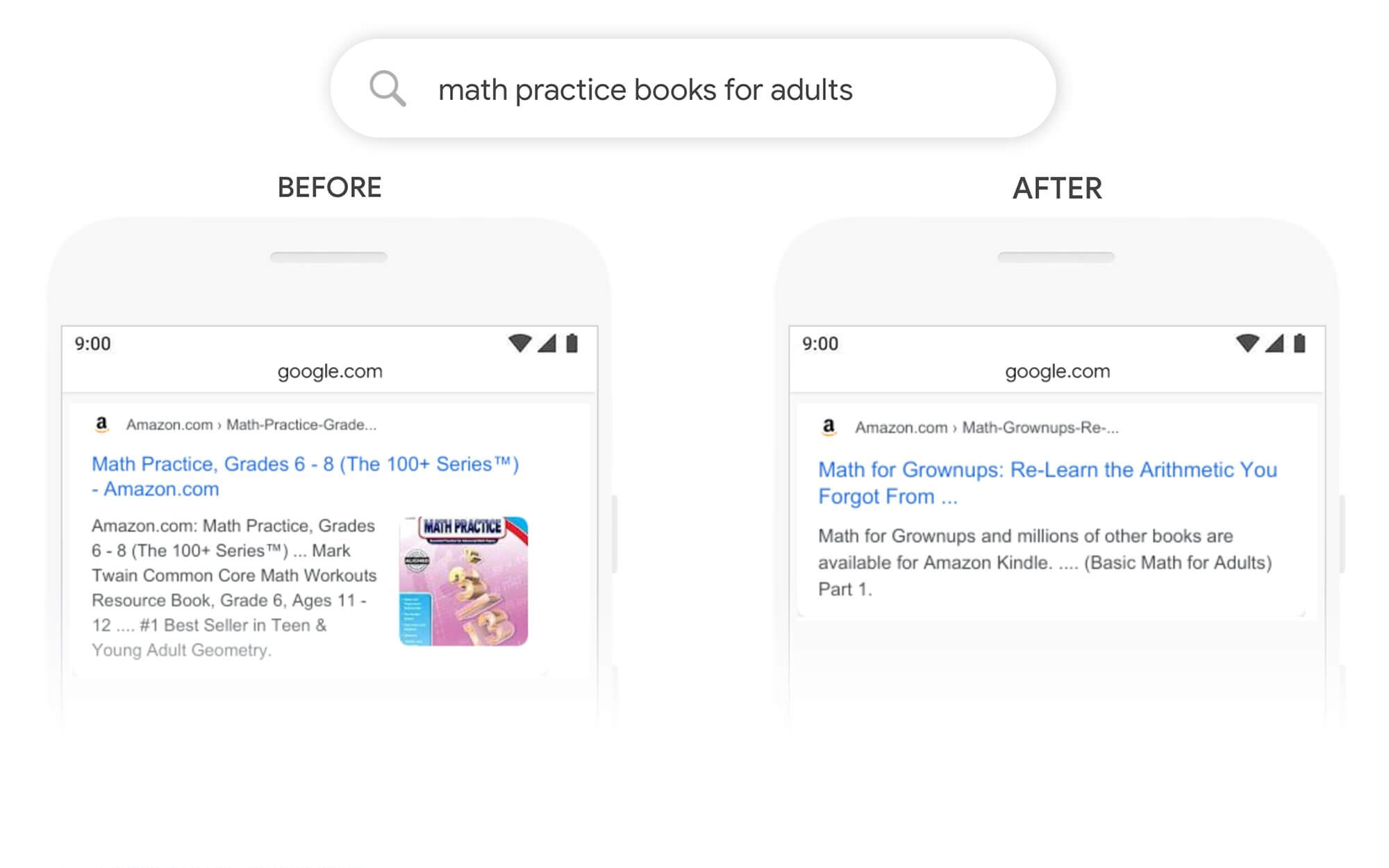

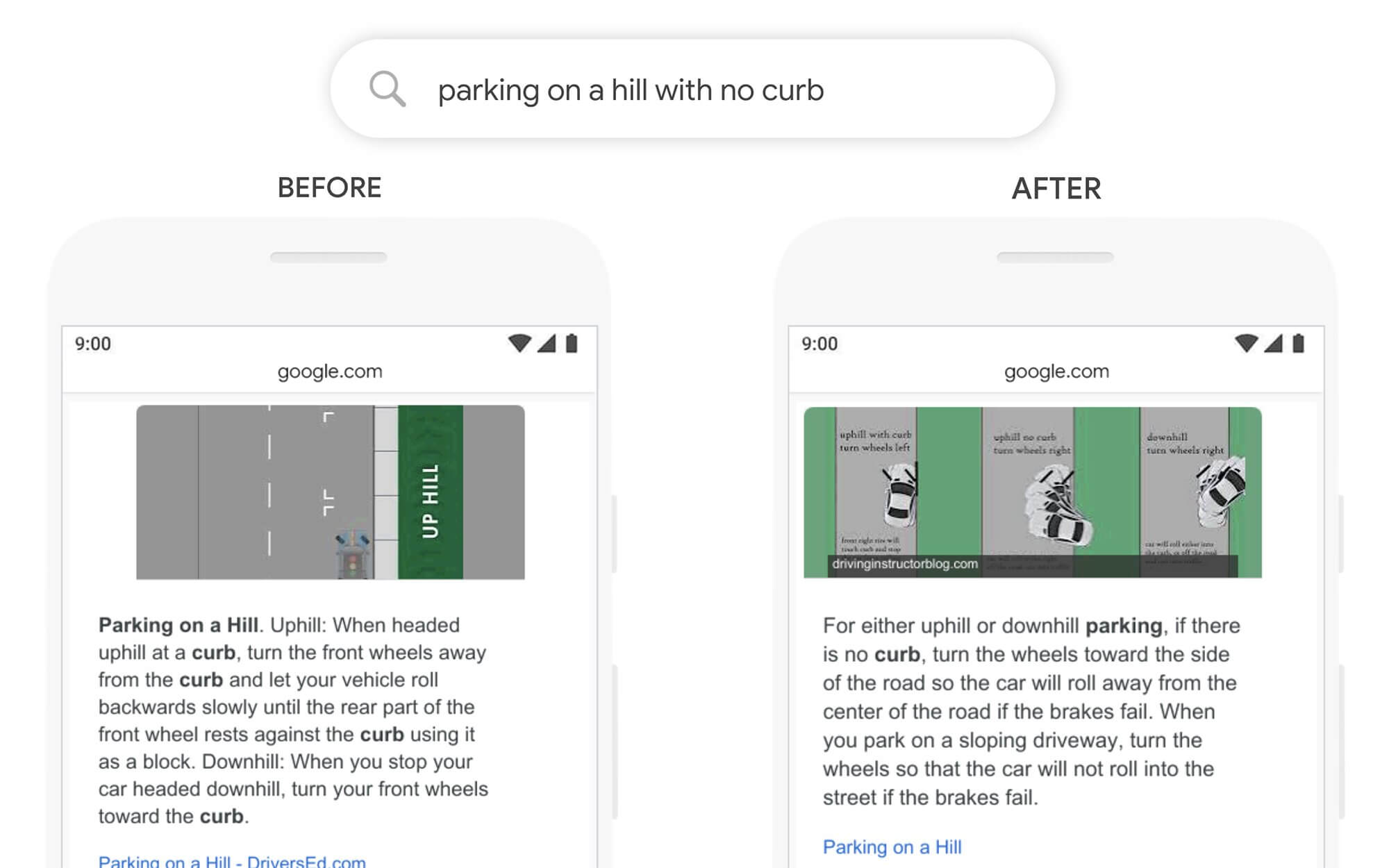

This new algorithm allows Google to do a much better job of finding useful information for search queries, particularly longer, more conversational queries, or searches where prepositions like “for” and “to” matter a lot to the meaning. Search will be able to understand the context of the words in your query.

Looking at the examples above, we can see how BERT is able to understand more complex, context-driven search terms in order to provide more relevant search results.

BERT is currently live for English-language search queries, and Google will be looking to apply it to other languages in the future. Google have labelled BERT as “representing the biggest leap forward in the past five years, and one of the biggest leaps forward in the history of Search”. This is no understatement from Google: BERT will work alongside RankBrain, is expected to affect around 10% of English-language searches in the US, and is also being used to improve featured snippets.

As BERT is a deep learning NLP model designed to process language and learn how it is used, the only way to optimise content for it is to ensure that content is relevant, informative and useful and, most importantly, written for humans.

by Zack Cornick